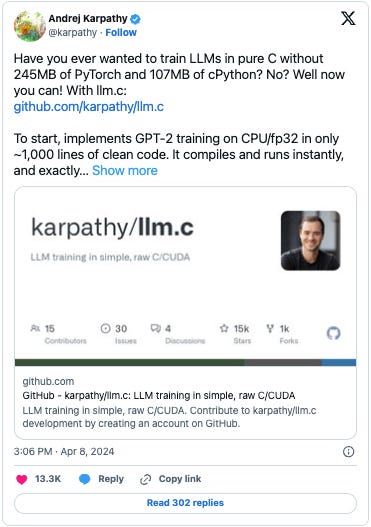

On April 8, 2024, Andrej Karpathy (the G.O.A.T. of DL/GPT educational content) embarked on a project called llm.c to implement GPT training and inference in plain C and CUDA, instead of relying on prominent enablers like PyTorch.

I’m a huge fan of his “let’s build it from scratch to understand our way around it” philosophy. It not only illuminates the internals of a technology by going back to first principles, but also provides a refreshing educational experience, one that stays with us forever.

At the time of drafting this page, the llm.c project already has about 16.6K ⭐️s and 1.7K ⤴️s, and even has notable extensions such as mojo 🔥 and rust 🦀. So it is very timely for us to get involved in this project, sharpen our knowledge knives, dissect the GPT family of models and enlighten ourselves.

There used to be a saying that goes like this:

If you want to learn a concept, read it thoroughly…

If you want to understand a concept, rewrite it in your own words…

If you want to master a concept, teach it to somebody else…

I’m going to take the 3rd route and help myself master the concept by taking y’all through this dissecting journey. While the project is currently devoting its time to honing the CUDA implementation and adding support for other GPUs, I for one come from the GPU-poor ecosystem. Hence I’m confining myself to exploring the CPU implementation of the GPT-2 model from the ground up. We’ll live to conquer the GPU story another day.

Your feedback on this series would go a long way in pushing me to publish a GPU-only series. Let’s C 🤞🏻🤞🏻 (no pun intended for ‘C’)

Over this series, I will start by explaining how a neural network is able to transform itself (C’mon seriously, again no pun intended 🥸) into an LLM, what role tokenization plays and how pervasively it impacts both the training and inference stages, and how data flows through the different layers of the transformer architecture. Finally, we will dissect the train_gpt2.c file to understand how Mr Karpathy has implemented GPT-2 in a very clean and concise manner.

Chapter Organization:

Following is how I intend to structure this series in 6 little chapters, with each chapter published as a Substack post:

Chapter 0 (this post) - Series Announcement & Chapters Introduction.

Chapter 1 - Introduction to Neural Network, Early Implementations of Language Models (RNNs) and the evolution journey to GPT Architecture.

Chapter 2 - Introduction to Tokenization, its importance and its impacts.

Chapter 3 - Probable Deep Dive on the GPT Architecture and special focus on the GPT Layers

Chapter 4 - Dissecting Karpathy’s train_gpt2.c file and a quick tour on how to set it up and run your own GPT-2 model.

Chapter 5 - Concluding the series with a decent overview of model augmentation techniques like Fine-tuning (PEFT, Q/LoRA), RAG, and the Local AI assistant development journey.

I understand some chapters might be repetitive for some of you reading this, and some may even wonder whether they are necessary. Well, the answer is that I’m doing this to master my own knowledge of those items, and I certainly feel the need to keep them for a convincing comprehension of this topic. If you feel the need to skip a specific chapter, please feel free to hop directly to the chapter that interests you. I would recommend following this organization, since I have put considerable editorial thought into it to aid my narration, but I can certainly understand why you would choose otherwise. I’ll try my best to write each chapter so that it can be consumed in its own right and picky readers won’t lose much!

While you are here, I would like to ask for your assistance in filling out this survey (if you may) so I can collect your valuable inputs on shaping this series.

That’s all for now. Catch me again in the next post, where we will start with the Introduction to NN, RNNs and Evolution towards GPT chapter of this series!