Local Packet Whisperer (LPW) - LLM Powered Packet Analysis

Read this post to learn about a new project I launched to explore how LLMs can help with network analysis & troubleshooting.

Originally posted on my blog!

A few weeks ago, I launched a hobby project called Local Packet Whisperer to explore how LLMs can be used in Wireshark pcap/pcapng analysis. I drew inspiration from a similar project named Packet Buddy, whose author relied on OpenAI models to perform pcap analysis. In LPW, however, I turned to various open-source models available on Hugging Face 🤗, served via projects like Ollama 🦙.

In this post, I'll give a brief overview of the project, its feature set and how to use it. In addition, over the weekend I played with many local models and different packet captures for fun, so I'll share those experiments and their interesting results as well. So go grab a coffee ☕️ and enjoy the ride 🎢

LPW Basic building blocks

LPW relies on Ollama, Streamlit & PyShark to let you chat with PCAP files locally and privately! 😎

For the uninitiated, Ollama is an open-source project, based on llama.cpp, that democratizes local LLM inference on consumer hardware. Using Ollama, one can effortlessly deploy and run LLM inference locally. The experience is so intuitive that it resembles Docker 🐳 commands. For example, `ollama pull mistral:latest` simply pulls the Mistral model (packaged in the GGUF format) from the Ollama hub, and `ollama run mistral:latest` runs the model and opens an interactive session in which you can chat with it.
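Beyond the interactive `ollama run` session, a running Ollama server also exposes a local REST API (by default at `http://localhost:11434`), which is the kind of interface a front end can call. Below is a minimal sketch using only the Python standard library; `build_chat_payload` and `ask_ollama` are hypothetical helper names for illustration, not LPW's actual code.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if your server listens elsewhere.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model: str, question: str, system_prompt: str = "") -> dict:
    """Build a non-streaming request body for Ollama's /api/chat endpoint."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": question})
    return {"model": model, "messages": messages, "stream": False}


def ask_ollama(payload: dict, url: str = OLLAMA_CHAT_URL) -> str:
    """POST the payload to the Ollama server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    # A non-streaming /api/chat response carries the answer under message.content.
    return body["message"]["content"]


if __name__ == "__main__":
    # Requires `ollama pull mistral:latest` and a running Ollama server.
    print(ask_ollama(build_chat_payload("mistral:latest", "What is a gNodeB?")))
```

With the model pulled and the server running, `ask_ollama(build_chat_payload("mistral:latest", "What is a gNodeB?"))` returns the model's answer as a plain string.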

Streamlit, on the other hand, is a popular Python framework for building stylish, modern UI front ends for data science projects. It is popular because even a novice Python developer can spin up a nice-looking UI in pure Python, without having to learn Angular or React. Hence Streamlit was a perfect choice for LPW's front end.

Lastly, there is PyShark. As you can imagine, it is the Pythonic way of dealing with pcap and pcapng files. PyShark is simply a wrapper around TShark, a command-line utility that gets installed as part of Wireshark.
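To give a feel for where PyShark fits, here is a hypothetical sketch (not LPW's actual code) that reads a capture and flattens each packet into a one-line text summary an LLM can consume. It assumes TShark is on the PATH and relies on PyShark's `FileCapture` plus the packet attributes `number`, `highest_layer` and `length`; `summarize_packets` and `load_capture` are illustrative names.

```python
from typing import Iterable, List


def summarize_packets(packets: Iterable, limit: int = 50) -> List[str]:
    """Turn packet objects into one-line text summaries for the LLM prompt.

    Each packet must expose `number`, `highest_layer` and `length`,
    as PyShark packet objects do.
    """
    lines = []
    for index, packet in enumerate(packets):
        if index >= limit:
            break  # keep the prompt small
        lines.append(f"#{packet.number} {packet.highest_layer} len={packet.length}")
    return lines


def load_capture(path: str, limit: int = 50) -> List[str]:
    """Read a pcap/pcapng file with PyShark (requires TShark to be installed)."""
    import pyshark  # imported lazily so summarize_packets works without PyShark

    capture = pyshark.FileCapture(path)
    try:
        return summarize_packets(capture, limit)
    finally:
        capture.close()  # stop the underlying TShark process
```

Keeping the summarizing step separate from file loading means it can be exercised with any objects that carry those three attributes, without TShark installed.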

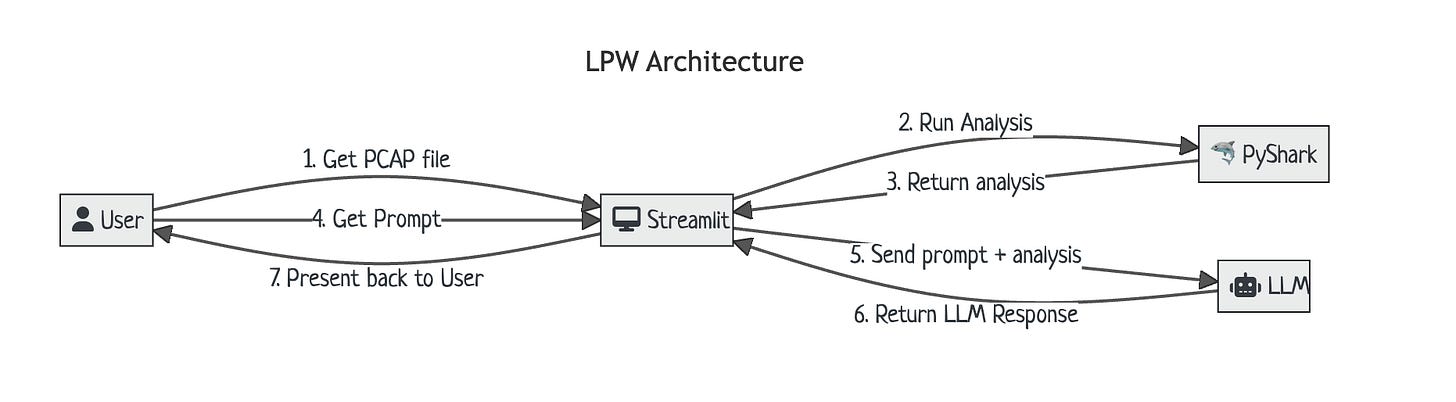

The diagram above shows how the different components interact to make LPW a reality!
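Roughly, the flow in the diagram is: PyShark turns the capture into text, that text plus the chat history is packed into a prompt, Ollama answers, and Streamlit renders the conversation. A minimal sketch of the prompt-assembly step, assuming the capture text is placed in the system message (my assumption; LPW may structure it differently). `build_messages` and `DEFAULT_SYSTEM_PROMPT` are hypothetical names.

```python
from typing import Dict, List

# Hypothetical default system prompt, for illustration only.
DEFAULT_SYSTEM_PROMPT = (
    "You are a network packet analysis assistant. "
    "Answer only from the packet summaries provided."
)


def build_messages(
    packet_lines: List[str],
    history: List[Dict[str, str]],
    question: str,
    system_prompt: str = DEFAULT_SYSTEM_PROMPT,
) -> List[Dict[str, str]]:
    """Combine system prompt, capture text, prior turns and the new question."""
    context = "\n".join(packet_lines)
    system = f"{system_prompt}\n\nPacket capture summary:\n{context}"
    # Prior turns go before the new question so the model keeps session memory.
    return [{"role": "system", "content": system}, *history, {"role": "user", "content": question}]
```

Resending the history on every turn is what gives the "session memory" effect described in the experiments below, at the cost of a growing context.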

Features 🪄:

✅ 100% local, private 🔒 PCAP assistant powered by a range of local LLMs under your control, served by Ollama 🦙

✅ LPW is now available as a pip package 😎. Just pip it away 🤌🏻

Just install Ollama as usual; the rest of the installation & execution is handled by LPW itself. To install LPW using pip 🐍, execute `pip install lpw` and then use `lpw {start | stop}` to whisper to your LPW 🗣️.

✅ LPW now supports streaming 🏄🏻‍♂️

There is a new settings page ⚙️ where you can toggle streaming responses. You can also customize the system prompt directly and flex your prompt engineering skills.

✅ LPW now supports the PCAP-NG format 👨🏻‍💻. Thanks to a community user for requesting this 🤗
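On the streaming toggle and custom system prompt: with `"stream": true`, Ollama's `/api/chat` endpoint returns newline-delimited JSON objects, each carrying a fragment of the reply, until one arrives with `"done": true`. The sketch below (hypothetical helper `iter_stream_tokens`, default Ollama address assumed) turns that stream into text fragments; in a Streamlit front end, such a generator could be handed to `st.write_stream`, available in recent Streamlit releases.

```python
import json
from typing import Iterable, Iterator, Union


def iter_stream_tokens(lines: Iterable[Union[bytes, str]]) -> Iterator[str]:
    """Yield text fragments from a streaming Ollama /api/chat response."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # tolerate blank lines
        chunk = json.loads(raw)
        fragment = chunk.get("message", {}).get("content", "")
        if fragment:
            yield fragment
        if chunk.get("done"):
            break  # the final object marks the end of the reply


if __name__ == "__main__":
    import urllib.request

    payload = {
        "model": "mistral:latest",
        "messages": [
            # A custom system prompt would replace this example text (assumption).
            {"role": "system", "content": "You are a packet analysis assistant."},
            {"role": "user", "content": "Summarize a 5G registration flow."},
        ],
        "stream": True,
    }
    request = urllib.request.Request(
        "http://localhost:11434/api/chat",  # assumed default Ollama address
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # The HTTP response iterates line by line, matching the NDJSON framing.
        for fragment in iter_stream_tokens(response):
            print(fragment, end="", flush=True)
```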

Fun part: Experiments with LPW

If you are reading this, you are certainly interested in LPW. Welcome aboard! Let's get into the fun part. As I mentioned in the introduction, over the weekend I played with many local models and different packet captures for fun. I have to say the experience was truly rewarding 😇.

With the right nudges 👉🏻 (aka prompts), I strongly feel we can get LLMs to inspect and troubleshoot complex network scenarios, and even automate network changes the way we have always imagined. In this section, I will present my findings. So let's start 🚀

To start with, I fed LPW the free5GC packet capture and asked it to identify the gNodeB name. With the right hints, the mistral-7B model got it on the first attempt! Refer to Figure a.

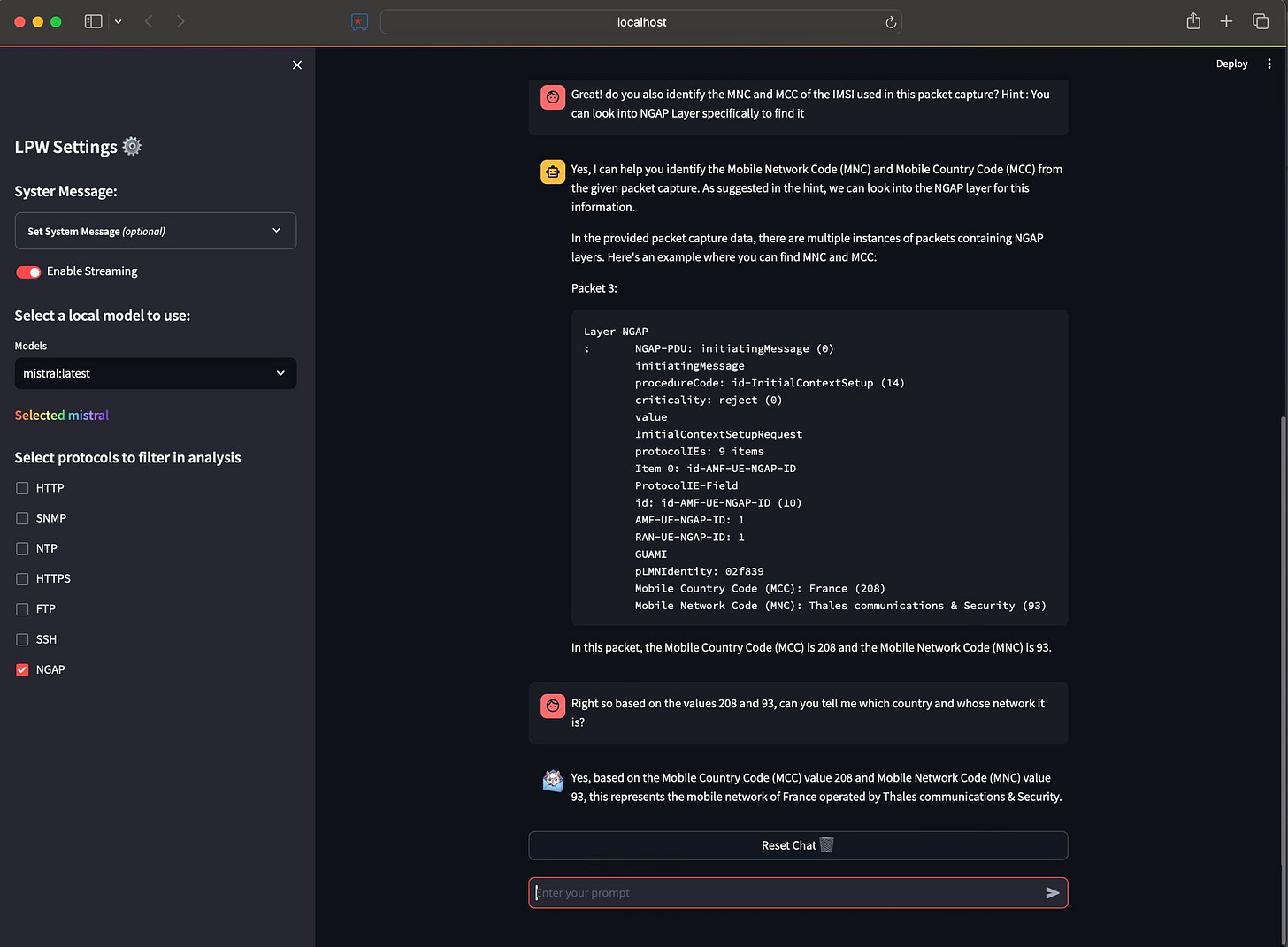

Next, I asked LPW to find the PLMN portion of the IMSI after explaining the MCC and MNC to it. This time it couldn't fully answer, but it at least found the part of the packet capture that holds this info. A good start, but not correct. I then nudged it further to use the MCC and MNC values, and this time it got it. Refer to Figure b.

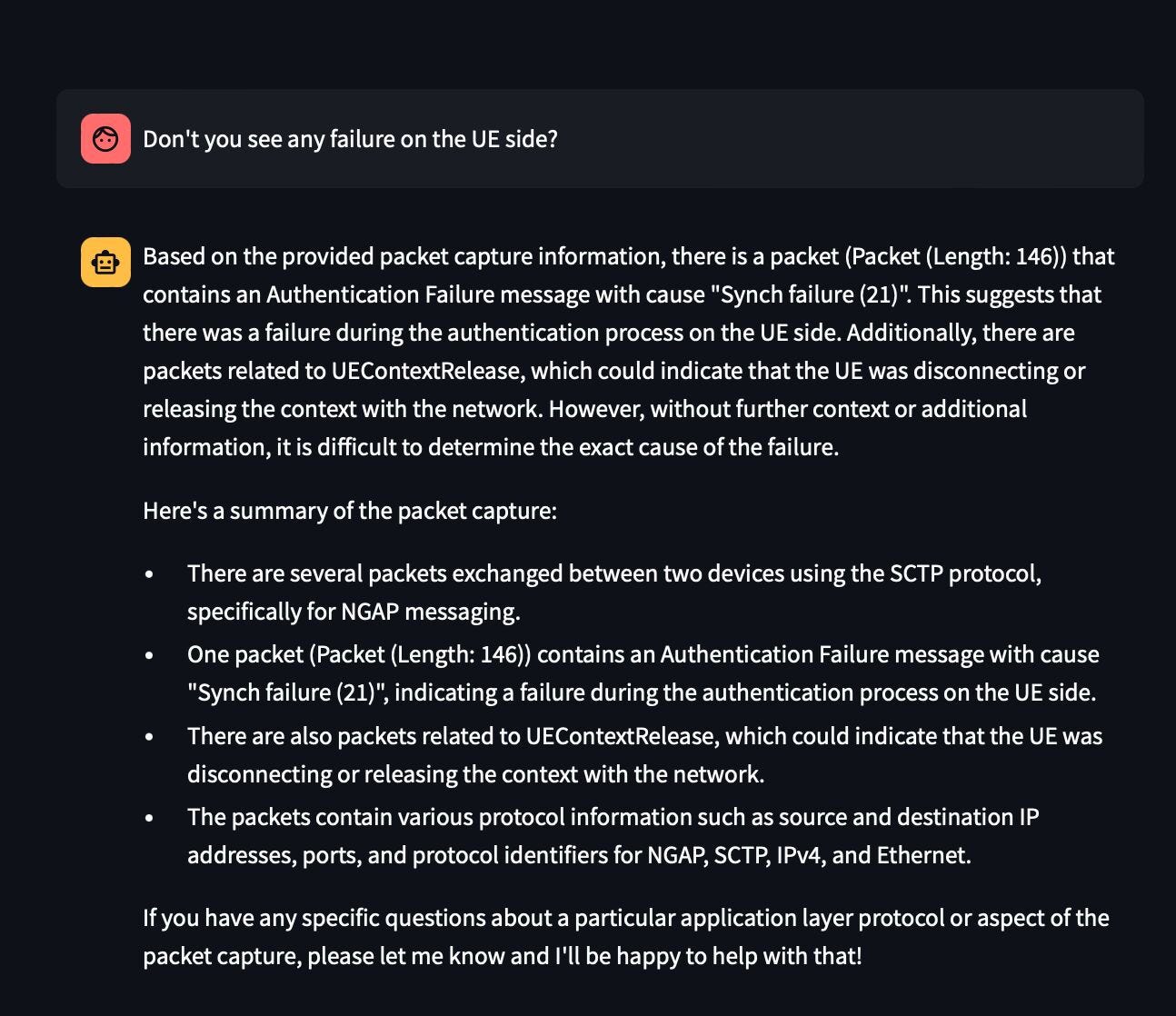

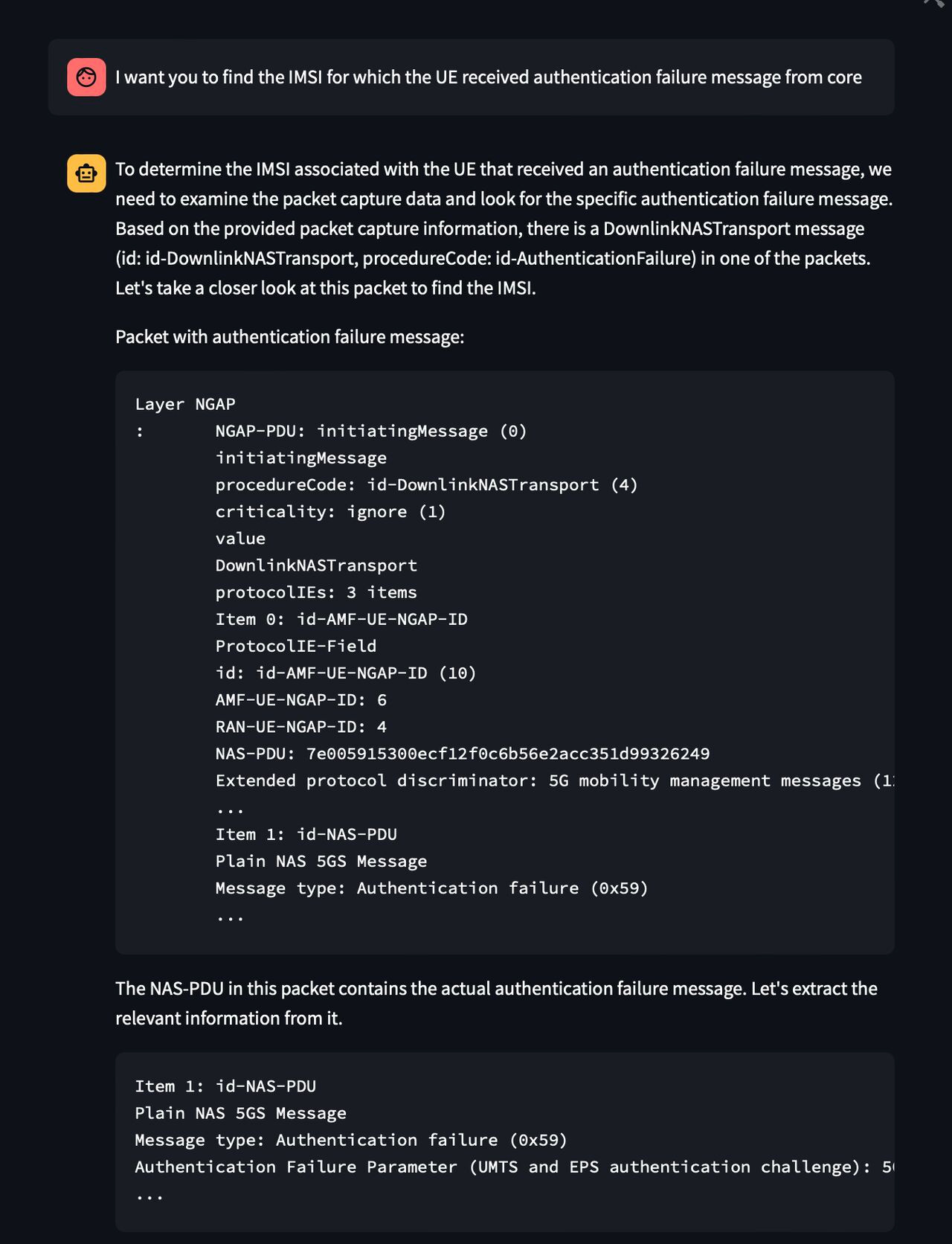
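For readers new to these 3GPP terms: an IMSI is up to 15 digits made of a 3-digit MCC (Mobile Country Code), a 2- or 3-digit MNC (Mobile Network Code) and the subscriber's MSIN, and the PLMN identity is simply MCC followed by MNC. That is essentially the "education" the model needed. A small illustrative helper (the names `split_imsi` and `ImsiParts` are hypothetical, and the IMSIs in the test are made-up values):

```python
from typing import NamedTuple


class ImsiParts(NamedTuple):
    mcc: str
    mnc: str
    msin: str

    @property
    def plmn(self) -> str:
        # The PLMN identity is the MCC followed by the MNC.
        return self.mcc + self.mnc


def split_imsi(imsi: str, mnc_length: int) -> ImsiParts:
    """Split an IMSI into MCC (3 digits), MNC (2 or 3 digits) and MSIN.

    The MNC length cannot be inferred from the IMSI alone; it depends on the
    operator, so the caller must supply it (e.g. from the network config).
    """
    if mnc_length not in (2, 3):
        raise ValueError("MNC is either 2 or 3 digits long")
    if not imsi.isdigit() or len(imsi) > 15 or len(imsi) <= 3 + mnc_length:
        raise ValueError("IMSI must be decimal digits, at most 15 long")
    return ImsiParts(imsi[:3], imsi[3:3 + mnc_length], imsi[3 + mnc_length:])
```

For instance, `split_imsi("999700000000001", 2).plmn` gives `"99970"`. The explicit `mnc_length` parameter reflects the same ambiguity the model ran into: without being told the MNC, it cannot know where the PLMN ends.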

This is not even RAG, just session memory with a little bit of hand-holding. Encouraged by this experiment, I shifted gears and captured a real error scenario in my local lab using Open5GS (a popular open-source 5GC implementation) and UERANSIM (a popular 5G gNodeB/UE simulator). In this experiment, I deliberately caused an authentication error by editing the OPc value and captured the transaction. When I fed this pcap to LPW and asked for any UE errors, it spotted the error in one go! Refer to Figure c.

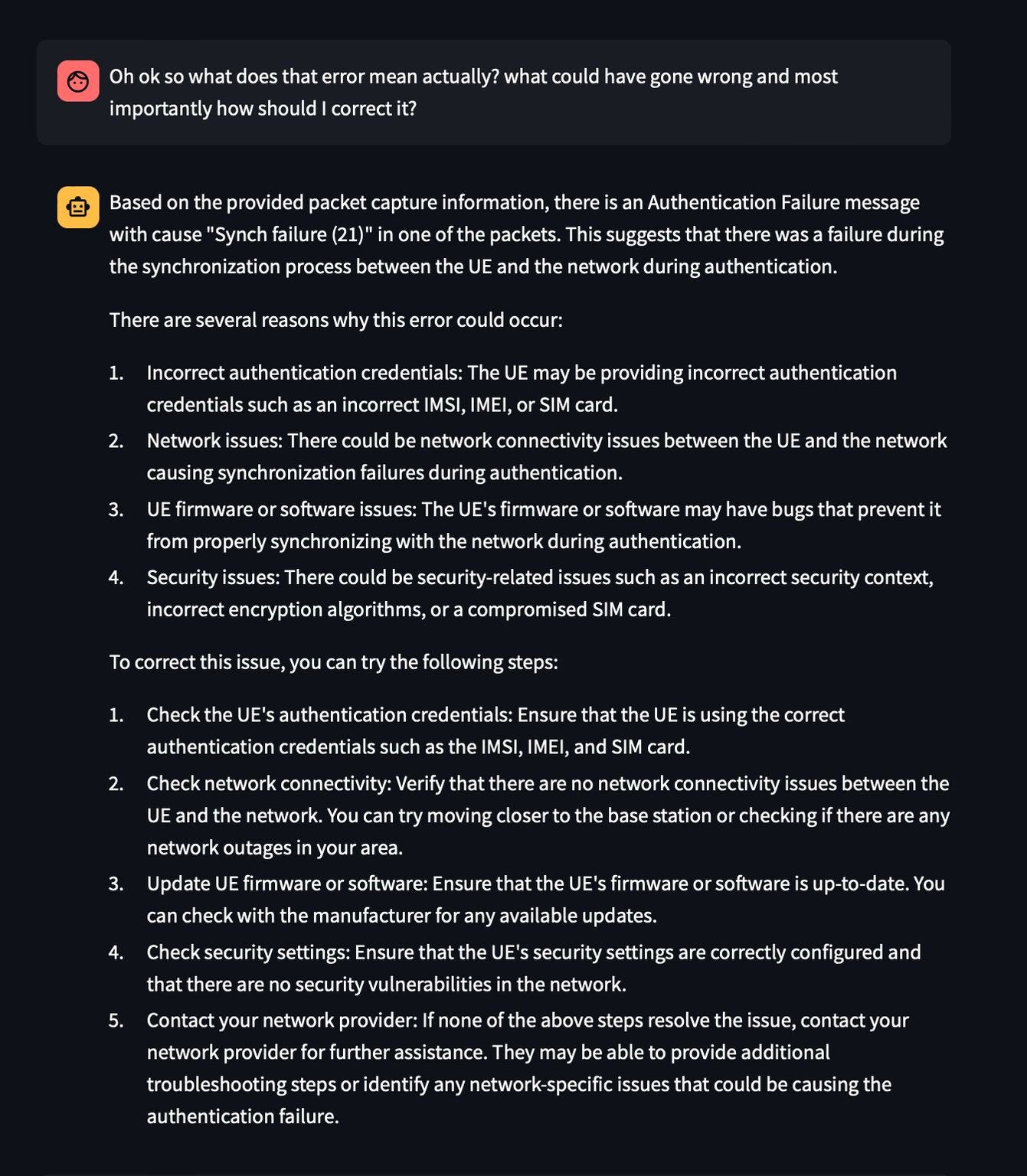

Amazed by this, I asked the model to explain the error and how one could resolve it in the field. As expected, the model breezed through this question. Refer to Figure d.
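For context: a mismatched OPc typically makes the UE's computed MAC disagree with the MAC in the AUTN sent by the network, so the UE rejects the challenge with an Authentication Failure carrying 5GMM cause #20 (MAC failure). If you want to double-check a model's explanation, a small lookup is enough. This is a deliberately partial, illustrative table based on 3GPP TS 24.501; `explain_5gmm_cause` is a hypothetical name.

```python
# Partial, illustrative map of authentication-related 5GMM causes (3GPP TS 24.501).
AUTH_5GMM_CAUSES = {
    20: ("MAC failure", "check that K and OPc on the UE/USIM match the subscriber record in the core"),
    21: ("Synch failure", "SQN out of range; the UE sends AUTS so the network can resynchronize"),
}


def explain_5gmm_cause(code: int) -> str:
    """Return a one-line explanation for an authentication-related 5GMM cause."""
    name, hint = AUTH_5GMM_CAUSES.get(code, ("not in this partial table", "see 3GPP TS 24.501"))
    return f"5GMM cause #{code} ({name}): {hint}"
```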

Next, I asked the model to find the IMSI impacted by this auth error. While the model successfully identified the right packet, it couldn't narrow down the IMSI. Refer to Figure e.

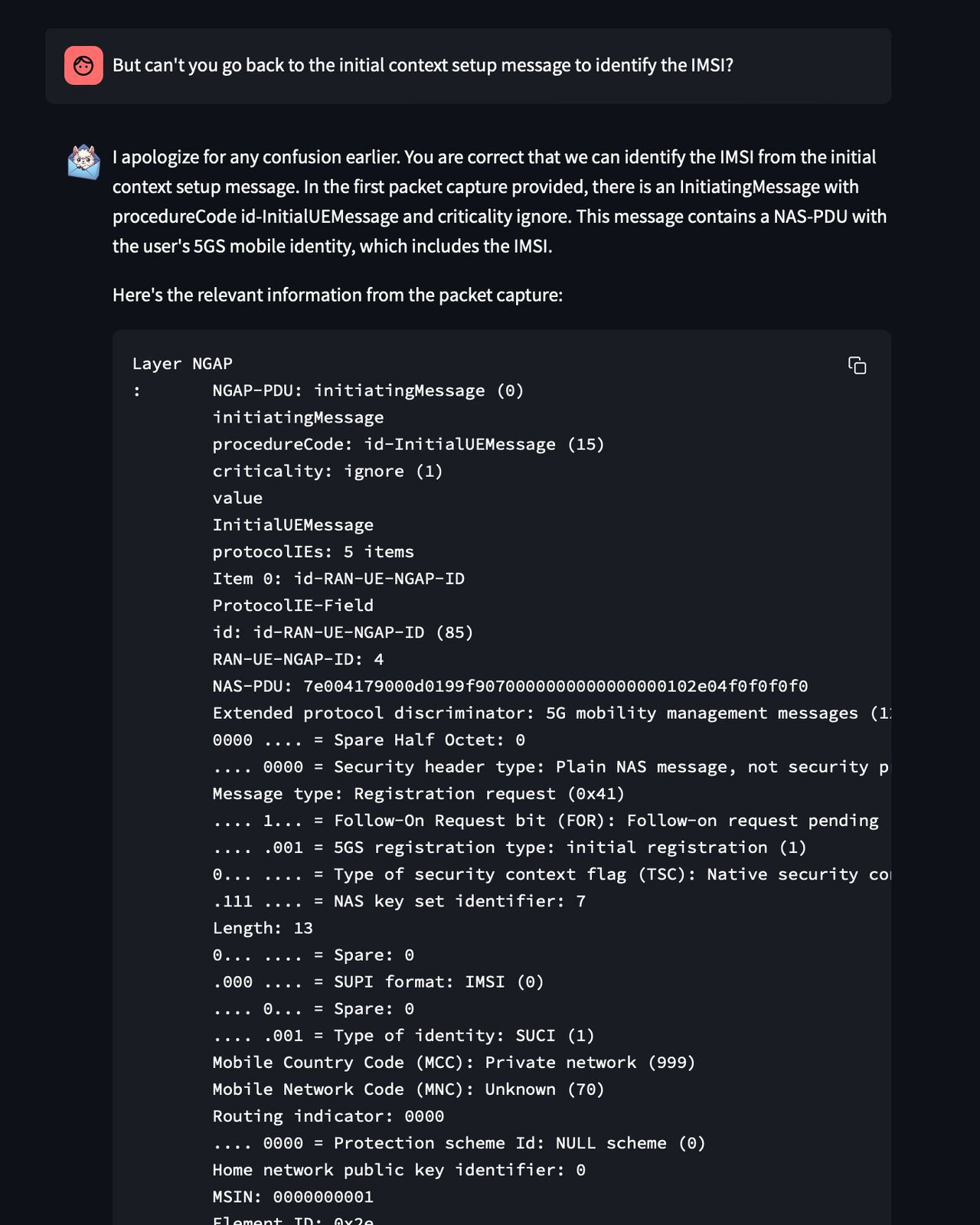

Again, I nudged the model by asking it to look back at the initial packet for that information. To my surprise, the model started its analysis well (much like a network engineer would) and began tracing back through the packets. Refer to Figure f.

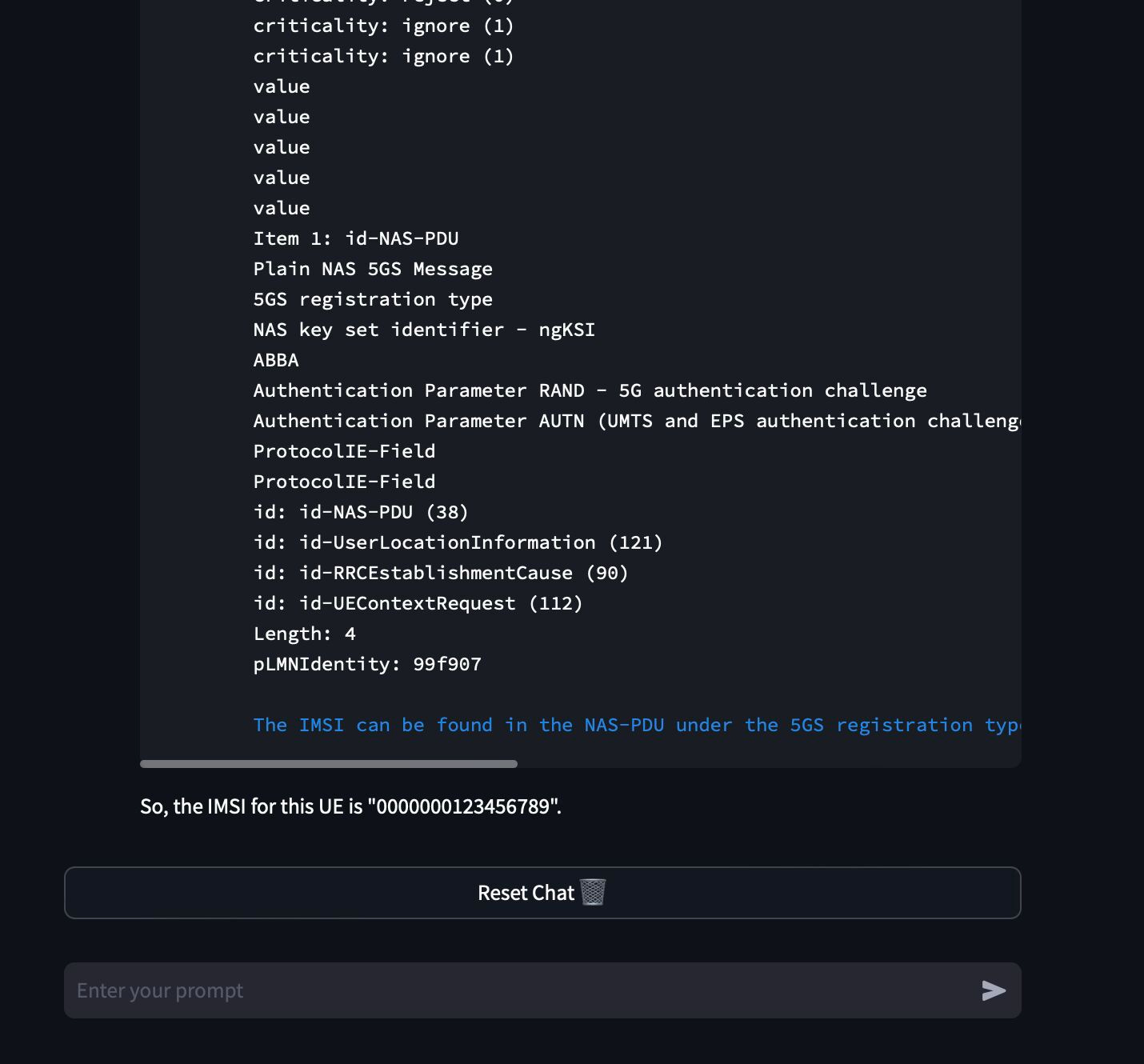
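The trace-back the model attempted can also be done deterministically: the failing NAS message and the UE's initial Registration Request belong to the same NGAP UE context, so one can correlate on the RAN UE NGAP ID and pick the earliest packet that carries a UE identity (SUCI/IMSI). A hypothetical sketch over pre-extracted packet dicts; the keys `ran_ue_ngap_id` and `identity` are my own naming, not PyShark field names.

```python
from typing import Dict, List, Optional


def find_initial_identity(
    packets: List[Dict[str, str]], failure_index: int
) -> Optional[Dict[str, str]]:
    """Return the earliest packet of the failing UE's context that carries an identity.

    Note: RAN UE NGAP IDs are only unique per gNB, so a multi-gNB capture would
    also need to key on the gNB / SCTP association.
    """
    ue_id = packets[failure_index].get("ran_ue_ngap_id")
    # Only look at packets up to (and including) the failure.
    for packet in packets[: failure_index + 1]:
        if packet.get("ran_ue_ngap_id") == ue_id and packet.get("identity"):
            return packet
    return None  # no identity seen for this UE context
```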

While it nearly found the initial message carrying the IMSI, it finally hallucinated a random IMSI value 😵‍💫. Refer to Figure g.

One possible reason is that I polluted the context with too much info, since I didn't use any RAG technique with a sophisticated vector store or similar. But honestly, this performance from a local LLM is simply remarkable.
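Short of full RAG, one cheap mitigation for context pollution is to send the model less: keep only the packet lines related to the question and cap the total size. A hypothetical sketch, assuming one text line per packet; `trim_context` and its parameters are illustrative, and the character budget is only a crude stand-in for the model's token limit.

```python
from typing import Iterable, List, Optional


def trim_context(
    lines: Iterable[str], max_chars: int, keywords: Optional[Iterable[str]] = None
) -> List[str]:
    """Keep relevant packet lines, in order, until a character budget is reached."""
    wanted = [k.lower() for k in keywords] if keywords else None
    kept: List[str] = []
    used = 0
    for line in lines:
        if wanted and not any(k in line.lower() for k in wanted):
            continue  # drop packets unrelated to the question
        cost = len(line) + 1  # +1 for the newline used when joining
        if used + cost > max_chars:
            break  # stop before overflowing the budget
        kept.append(line)
        used += cost
    return kept
```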

What’s next? & Resources!

So what's next? Well, the next step is to enable a RAG pipeline and see if it improves the results. Stay tuned if you are interested in learning more 😎
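For readers wondering what enabling RAG would mean here: instead of stuffing the whole capture into the prompt, split it into chunks, score each chunk against the question and send only the best matches. The sketch below is illustrative only; it swaps the embedding model and vector store for a plain bag-of-words cosine similarity so it runs with the standard library, and all names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def _vector(text: str) -> Counter:
    # Lower-cased word counts; a real pipeline would use an embedding model here.
    return Counter(re.findall(r"[a-z0-9-]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(lines: List[str], question: str, chunk_size: int = 20, top_k: int = 3) -> List[str]:
    """Split packet lines into chunks and return the top_k chunks most similar to the question."""
    chunks = ["\n".join(lines[i:i + chunk_size]) for i in range(0, len(lines), chunk_size)]
    query = _vector(question)
    # sorted() is stable, so equally scored chunks keep their capture order.
    ranked = sorted(chunks, key=lambda c: _cosine(query, _vector(c)), reverse=True)
    return ranked[:top_k]
```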

If you are curious about this project, you can find the complete guide to install & use LPW here. Furthermore, your comments, issues & PRs are always welcome. Also don’t forget to ⭐️ the repo! 🤗
